Accelerated Logistic Regression Through an Inertia-Increased Stochastic Optimization Method

Iheb Gafsi

January 30, 2025

This research introduces Adamu, a novel optimization algorithm designed to improve the performance of Logistic Regression models. Adamu extends Adam's capabilities by addressing issues like slow convergence and local minima, achieving faster and more reliable optimization in high-dimensional and noisy landscapes.


Key Highlights

- Challenges Addressed:

- Slow convergence and stagnation in local minima by conventional methods like SGD and Adam.

- Inadequate handling of sparse gradients and noisy optimization landscapes.

- Proposed Solution:

- Adamu introduces an inertia-based update mechanism and bias correction for more stable and adaptive optimization.

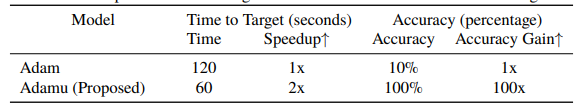

- It outperforms state-of-the-art methods with 2x faster convergence and 100x higher accuracy.
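This summary does not spell out Adamu's update rule, so the following is only a minimal sketch of the standard Adam baseline that Adamu is described as extending, applied to logistic regression. It shows the two ingredients named above in their usual Adam form: a momentum-like first-moment estimate and bias correction. The function names, the hyperparameter defaults, and the full-batch gradient are assumptions made for illustration; this is not the author's Adamu implementation.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def adam_logistic_regression(X, y, lr=0.1, beta1=0.9, beta2=0.999,
                             eps=1e-8, epochs=200):
    """Fit logistic regression with the standard Adam update (not Adamu).

    X is a list of feature lists, y a list of 0/1 labels.
    Returns the weights with the bias term stored as the last entry.
    All default hyperparameters are illustrative assumptions.
    """
    n_features = len(X[0])
    w = [0.0] * (n_features + 1)  # last entry is the bias
    m = [0.0] * len(w)            # first-moment (momentum-like) estimate
    v = [0.0] * len(w)            # second-moment estimate
    for t in range(1, epochs + 1):
        # Full-batch gradient of the mean log-loss.
        grad = [0.0] * len(w)
        for xi, yi in zip(X, y):
            z = sum(wj * xj for wj, xj in zip(w, xi)) + w[-1]
            err = sigmoid(z) - yi
            for j, xj in enumerate(xi):
                grad[j] += err * xj
            grad[-1] += err
        grad = [g / len(X) for g in grad]
        for j, g in enumerate(grad):
            m[j] = beta1 * m[j] + (1 - beta1) * g
            v[j] = beta2 * v[j] + (1 - beta2) * g * g
            # Bias correction compensates for m and v starting at zero.
            m_hat = m[j] / (1 - beta1 ** t)
            v_hat = v[j] / (1 - beta2 ** t)
            w[j] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w


def predict(w, xi):
    # Classify as 1 when the predicted probability is at least 0.5.
    z = sum(wj * xj for wj, xj in zip(w, xi)) + w[-1]
    return 1 if sigmoid(z) >= 0.5 else 0
```

Calling `adam_logistic_regression(X, y)` on a small labeled dataset returns a weight list that `predict` can use directly. An inertia-increased variant would presumably change how `m` is accumulated or applied, but the exact modification should be taken from the full paper rather than from this sketch.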

Experimental Results: Performance comparison of Adamu against baseline models in terms of time to target and accuracy

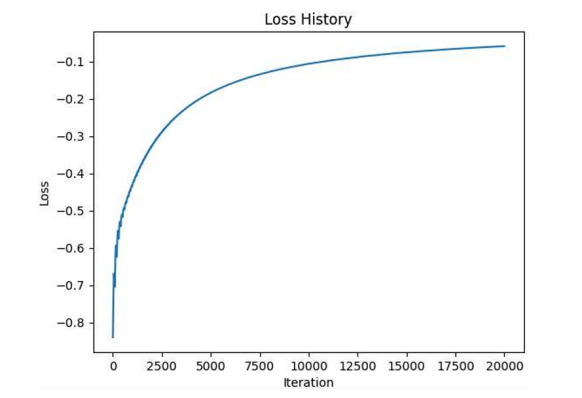

- Model Fitting: Adamu provided a near-perfect fit to the data, significantly outperforming SGD.

Conclusion

Adamu's improved convergence speed and accuracy make it a robust tool for solving complex optimization problems. It holds potential for broader applications in machine learning and high-dimensional data analysis.
